Introduction

The selection of a Graphics Processing Unit (GPU) is a crucial decision for anyone involved in deep learning. The right GPU can drastically reduce training times, enable more complex models, and expedite research and development. This guide covers the factors that influence GPU performance and selection, with a focus on NVIDIA's Ampere architecture. Whether you're building a new system from scratch or upgrading an existing one, understanding these factors will help you make an informed decision that matches both your computational needs and budget constraints.

Deep learning models are becoming increasingly complex, pushing the boundaries of hardware capabilities. The GPU you choose directly affects the efficiency and speed of your training processes. It’s not just about raw power; factors like memory bandwidth, processor architecture, and software compatibility play significant roles. This guide aims to demystify the complexities of GPU technology, providing clear insights into how each component impacts deep learning tasks.

We will explore various aspects of GPUs, from the basics of GPU architecture to advanced features specific to the NVIDIA Ampere series. By the end of this post, you will have a comprehensive understanding of what makes a GPU suitable for deep learning, how to evaluate GPUs based on your specific needs, and what the future holds for GPU technology in this rapidly evolving field.

Deep Dive into GPU Basics

At the heart of every GPU are its processing cores, which together run thousands of threads simultaneously, making GPUs ideal for the parallel processing demands of deep learning. Understanding the architecture of these cores, how they manage data, and how they interact with other GPU components is foundational. Each core is individually simpler than a CPU core, but a GPU has thousands of them, and operations like matrix multiplication break down into many independent calculations that can run side by side. That is why GPUs drastically outperform CPUs on matrix multiplication, a common operation in deep learning algorithms.
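To make the parallelism concrete, here is a minimal pure-Python sketch of matrix multiplication. It runs sequentially on the CPU and is only meant to show that every output element is an independent dot product, which is the property a GPU exploits. The function names are illustrative and not part of any library.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix given as nested lists."""
    m, k, n = len(a), len(b), len(b[0])
    # Each (i, j) entry depends only on row i of `a` and column j of `b`,
    # so all m * n entries could be computed at the same time.
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
        for i in range(m)
    ]


def matmul_flops(m, k, n):
    """Floating-point operations needed: one multiply and one add per term."""
    return 2 * m * k * n


product = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
flops = matmul_flops(1024, 1024, 1024)
```

A single 1024 x 1024 product already needs about two billion floating-point operations, and a training step performs many such products.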

Memory plays a pivotal role in GPU performance. GPUs have their own dedicated memory, known as VRAM, which holds the model weights, gradients, optimizer state, and intermediate activations during training. The amount of VRAM determines how large a model and batch size you can fit, and its speed affects how quickly a model can be trained. Memory bandwidth, the rate at which data can be read from or written to the memory, is equally critical. Higher bandwidth allows for faster data transfer, reducing bottlenecks and improving overall computational efficiency.
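As a rough way to size VRAM, the sketch below estimates the memory taken by weights, gradients, and optimizer state alone. It assumes FP32 values (4 bytes each) and an Adam-style optimizer that keeps two extra values per parameter. Activations, which depend on batch size and architecture, are deliberately left out, so treat the result as a lower bound rather than a real requirement.

```python
def training_vram_gib(num_params, bytes_per_value=4, optimizer_states=2):
    """Lower-bound VRAM estimate in GiB, excluding activations.

    Assumptions (not measured values): one weight, one gradient, and
    `optimizer_states` extra values per parameter, all stored at
    `bytes_per_value` bytes.
    """
    values_per_param = 1 + 1 + optimizer_states  # weight + gradient + optimizer
    total_bytes = num_params * values_per_param * bytes_per_value
    return total_bytes / 2 ** 30


# Hypothetical 1-billion-parameter model trained in FP32 with Adam.
estimate = training_vram_gib(1_000_000_000)
print(f"{estimate:.1f} GiB before activations")
```

Under these assumptions a one-billion-parameter model needs roughly 15 GiB before a single activation is stored, which is why VRAM capacity is often the first constraint to check.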

Another fundamental aspect of GPU architecture is the memory hierarchy, which includes various types of cache (L1, L2) and shared memory. These memory types have different speeds and capacities, impacting how quickly data can be accessed during computations. An effective GPU for deep learning optimizes this hierarchy to minimize data retrieval times, which can be a major limiting factor in training speeds.

The Pivotal Role of Tensor Cores

Tensor Cores are specialized hardware units found in modern NVIDIA GPUs, designed specifically to accelerate the tensor operations at the heart of deep learning. These cores significantly enhance the ability to perform matrix multiplications efficiently, reducing the training time for deep neural networks. The introduction of Tensor Cores has shifted the landscape of deep learning hardware, offering speedups that can be several-fold over previous GPU generations.

The effectiveness of Tensor Cores stems from their ability to handle mixed-precision computing. They can multiply values in lower-precision formats such as FP16 while accumulating the results in higher precision, which is generally sufficient for deep learning and allows more operations to be carried out per clock cycle. This capability not only speeds up processing but also reduces power consumption, which is crucial for building energy-efficient systems.
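The reason mixed precision keeps a higher-precision accumulator can be shown with a small CPU emulation. The sketch below uses Python's `struct` module to round values to half precision (FP16) and single precision (FP32). It is not Tensor Core code, and the helper names are made up for illustration.

```python
import struct


def to_fp16(x):
    """Round x to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round x to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def accumulate(values, round_fn):
    """Sum values, rounding the running total after every addition."""
    total = 0.0
    for v in values:
        total = round_fn(total + v)
    return total


ones = [to_fp16(1.0)] * 4096          # inputs stored in FP16
fp16_sum = accumulate(ones, to_fp16)  # accumulator also FP16
fp32_sum = accumulate(ones, to_fp32)  # FP16 inputs, FP32 accumulator
print(fp16_sum, fp32_sum)
```

With an FP16 accumulator the sum stops growing at 2048, because FP16 cannot represent 2049 and the addition rounds back down. With an FP32 accumulator the same FP16 inputs sum to the correct 4096.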

To fully leverage Tensor Cores, it's essential to understand their integration into the broader GPU architecture. They work alongside traditional CUDA cores: Tensor Cores take over the dense matrix multiply-accumulate operations that dominate AI workloads, while CUDA cores handle the remaining general-purpose work. As deep learning models become increasingly complex, the role of Tensor Cores in achieving computational efficiency becomes more pronounced, making GPUs equipped with these cores highly desirable for researchers and developers.

Memory Bandwidth and Cache Hierarchy in GPUs

Memory bandwidth is a critical factor in GPU performance, especially in deep learning, where large batches of data and model parameters must be moved constantly between memory and the compute cores. The higher the memory bandwidth, the more data can be delivered to the cores each second, leading to faster training and inference times. GPUs designed for deep learning often feature enhanced memory specifications to support these needs, enabling them to handle the extensive computations required by modern neural networks.
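One common way to reason about whether an operation is limited by bandwidth or by compute is a roofline-style estimate, sketched below. The peak throughput and bandwidth figures are hypothetical round numbers chosen for easy arithmetic, not the specifications of any real GPU.

```python
def attainable_tflops(peak_tflops, bandwidth_gb_s, flops_per_byte):
    """Roofline estimate: throughput is capped by compute or by memory.

    flops_per_byte is the arithmetic intensity of the operation, i.e. how
    many floating-point operations it performs per byte moved from memory.
    """
    memory_bound_tflops = flops_per_byte * bandwidth_gb_s / 1000
    return min(peak_tflops, memory_bound_tflops)


# Hypothetical GPU: 100 TFLOPS peak compute, 1000 GB/s memory bandwidth.
low_intensity = attainable_tflops(100, 1000, flops_per_byte=10)
high_intensity = attainable_tflops(100, 1000, flops_per_byte=200)
print(low_intensity, high_intensity)
```

On this hypothetical GPU, an operation doing 10 FLOPs per byte can reach at most 10 TFLOPS no matter how fast the cores are, while one doing 200 FLOPs per byte is limited by the 100 TFLOPS compute peak instead.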

The cache hierarchy in a GPU plays a significant role in optimizing data retrieval and storage processes during computation. L1 and L2 caches serve as temporary storage for frequently accessed data, reducing the need to fetch data from slower, larger memory sources. Understanding how different GPU models manage their cache can provide insights into their efficiency. A well-optimized cache system minimizes latency and maximizes throughput, critical for maintaining high performance in compute-intensive tasks like training large models.

Shared memory is another crucial component, acting as an intermediary between the fast registers and the slower global memory. It allows a group of cooperating threads to access the same data quickly and efficiently, which is particularly important when multiple operations need that data concurrently. Optimizing the use of shared memory can significantly reduce the time it takes to perform operations, thereby enhancing the overall performance of the GPU.
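The benefit of staging data in shared memory can be estimated by counting global memory reads for a square matrix multiplication. The sketch below compares a naive scheme, where every output element reads its full row and column from global memory, with a tiled scheme in which a group of threads loads one tile at a time into shared memory and reuses it. This is a simplified counting model under those assumptions, not a measurement of real hardware.

```python
def global_loads_naive(n):
    """Each of the n * n outputs reads n elements of A and n elements of B."""
    return 2 * n ** 3


def global_loads_tiled(n, tile):
    """Each tile x tile output block loads A and B one tile at a time."""
    if n % tile != 0:
        raise ValueError("this sketch assumes n is a multiple of tile")
    blocks = (n // tile) ** 2                        # output blocks
    loads_per_block = 2 * (n // tile) * tile * tile  # tiles of A and of B
    return blocks * loads_per_block


n, tile = 1024, 16
reduction = global_loads_naive(n) / global_loads_tiled(n, tile)
print(f"global memory reads reduced {reduction:.0f}x")
```

In this model the number of global memory reads drops by a factor equal to the tile width, so 16 x 16 tiles cut traffic 16-fold.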

Evaluating GPU Performance for Deep Learning

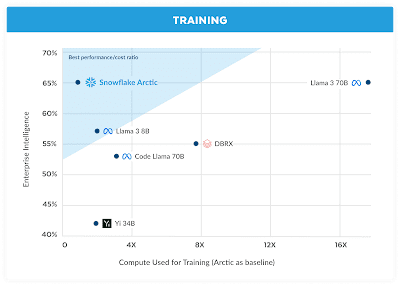

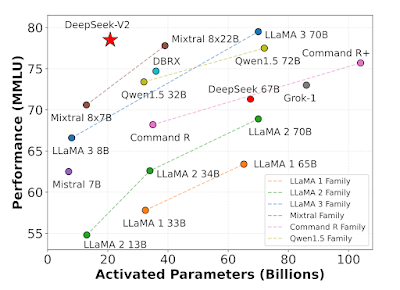

When choosing a GPU for deep learning, it’s important to consider not just the theoretical specifications, but also real-world performance benchmarks. Benchmarks can provide a more accurate indication of how a GPU will perform under specific conditions. It’s essential to look at benchmarks that reflect the type of work you’ll be doing, as performance can vary widely depending on the task and the software framework used.
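If you run your own benchmarks, a small timing harness with warm-up runs and a median over several repeats gives more stable numbers than a single measurement. The sketch below is a generic, framework-agnostic example, and the workload is a stand-in for a real training or inference step. Note that GPU work is typically launched asynchronously, so a real GPU benchmark must also wait for the device to finish before reading the timer (for example with your framework's synchronize call).

```python
import statistics
import time


def benchmark(fn, warmup=3, repeats=10):
    """Return the median wall-clock time of fn() in seconds."""
    for _ in range(warmup):
        fn()  # warm-up runs are not timed (caches, lazy initialization)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def stand_in_workload():
    """Placeholder for a real training or inference step."""
    return sum(i * i for i in range(10_000))


median_seconds = benchmark(stand_in_workload)
print(f"median: {median_seconds * 1e3:.3f} ms")
```

The median is used rather than the mean so that an occasional slow run, for instance one interrupted by another process, does not skew the result.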

Understanding performance metrics such as TFLOPS, memory bandwidth, and power efficiency is crucial. TFLOPS (trillions of floating-point operations per second) measures the peak computational throughput of a GPU and is a key indicator of its ability to handle complex mathematical calculations quickly. The figure on a spec sheet is a theoretical maximum, so real workloads usually achieve less. This metric should also be balanced with considerations of power consumption and efficiency, particularly in environments where energy consumption is a concern.
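Theoretical peak TFLOPS is usually derived from core count and clock speed, counting a fused multiply-add as two operations per core per cycle. The sketch below applies that formula to a hypothetical GPU. The core count, clock, and power figures are invented for illustration and do not describe a real product.

```python
def peak_tflops(cores, clock_ghz, flops_per_core_per_cycle=2):
    """Theoretical peak throughput; 2 FLOPs per cycle assumes one FMA."""
    return cores * clock_ghz * flops_per_core_per_cycle / 1000


def tflops_per_watt(tflops, watts):
    """Simple efficiency metric for comparing GPUs at different power draws."""
    return tflops / watts


# Hypothetical GPU: 10,000 cores at 1.5 GHz drawing 300 W.
peak = peak_tflops(cores=10_000, clock_ghz=1.5)
efficiency = tflops_per_watt(peak, watts=300)
print(f"{peak:.1f} TFLOPS peak, {efficiency:.2f} TFLOPS per watt")
```

Comparing the efficiency figure across candidate GPUs, alongside benchmark results, helps when power or cooling is a constraint.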

Finally, it’s important to evaluate the ecosystem surrounding a GPU. This includes the availability of software libraries, community support, and compatibility with other hardware and software tools. NVIDIA's CUDA toolkit, for instance, offers a comprehensive suite of development tools that can significantly accelerate development times and improve the efficiency of your deep learning projects.

Conclusion

Selecting the right GPU for deep learning involves a careful analysis of both technical specifications and practical considerations. By understanding the fundamental aspects of GPU architecture, the special functions of Tensor Cores, and the importance of memory management, you can make a well-informed decision that maximizes both performance and cost-efficiency. As the field of deep learning continues to evolve, staying informed about the latest developments in GPU technology will be crucial for anyone looking to leverage the full potential of their deep learning models.