SWE-Agent

SWE-Agent is a system in which a language-model agent resolves real GitHub issues by working inside a code repository through a purpose-built agent-computer interface (ACI). The model does not get a raw shell. The ACI instead gives it concise commands for searching the repository, viewing files through a bounded window, editing line ranges, and running code, and it formats the feedback for the model. The paper shows that this interface design substantially improves the agent's ability to solve software-engineering tasks on the SWE-bench benchmark compared with a plain shell interface.
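
One idea behind an agent-computer interface is that the agent sees a file through a bounded, line-numbered window instead of receiving the whole file at once. The sketch below illustrates that idea. The class name, its methods, and the 100-line window are assumptions made for illustration; this is not SWE-agent's actual code.

```python
from dataclasses import dataclass


@dataclass
class FileViewer:
    """Hypothetical ACI-style viewer that exposes a bounded window of a file."""
    lines: list[str]
    window: int = 100  # assumed window size
    top: int = 0       # 0-based index of the first visible line

    def render(self) -> str:
        end = min(self.top + self.window, len(self.lines))
        header = f"[lines {self.top + 1}-{end} of {len(self.lines)}]"
        # Prefix each line with its 1-based number so edit commands can cite ranges
        body = [f"{i + 1}:{self.lines[i]}" for i in range(self.top, end)]
        return "\n".join([header, *body])

    def scroll_down(self) -> str:
        # Advance one window, but never past the last full page
        last_top = max(len(self.lines) - self.window, 0)
        self.top = min(self.top + self.window, last_top)
        return self.render()

    def scroll_up(self) -> str:
        self.top = max(self.top - self.window, 0)
        return self.render()
```

Keeping each observation short and numbered lets the agent name exact line ranges in follow-up edit commands without overflowing its context.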

Mixture-of-Depths

Mixture-of-Depths (MoD) lets a transformer decide dynamically how much compute each token in a sequence receives. In each routed block, a learned router scores every token. Only the top-k tokens, where k is a capacity fixed in advance, pass through the block's self-attention and MLP. The remaining tokens skip the block through the residual connection. Because k is fixed, tensor sizes stay static and the total compute budget is known ahead of time, while the model still learns which tokens need more processing. The paper reports that MoD models can match baseline transformers trained with equivalent compute while using fewer FLOPs per forward pass and sampling faster.
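
Below is a minimal sketch of a single MoD-routed block. It makes the following assumptions:

- The router is linear, so a token's score is the dot product of a router weight vector with the token vector.
- `block_fn` is a hypothetical per-token stand-in for the block's attention and MLP. In a real MoD block the selected tokens also attend to one another, so treating the block as per-token is a simplification.
- Following the paper's formulation, a selected token's block output is scaled by its router score, which keeps the router on the gradient path.

```python
def mod_block(tokens, router_w, block_fn, capacity):
    """Route only the top-`capacity` tokens through `block_fn`; the rest skip it.

    tokens:   list of token vectors (lists of floats)
    router_w: router weight vector; a token's score is dot(router_w, x)
    block_fn: hypothetical stand-in for the block's attention + MLP
    capacity: k, the fixed number of tokens this block processes
    """
    scores = [sum(w * v for w, v in zip(router_w, x)) for x in tokens]
    # Indices of the k highest-scoring tokens (a fixed k keeps tensor sizes static)
    ranked = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    selected = set(ranked[:capacity])
    out = []
    for i, x in enumerate(tokens):
        if i in selected:
            fx = block_fn(x)
            # Residual update scaled by the router score
            out.append([xi + scores[i] * fi for xi, fi in zip(x, fx)])
        else:
            # Skipped tokens flow through the residual connection unchanged
            out.append(list(x))
    return out, sorted(selected)
```

If `capacity` is set to half the sequence length, the block processes only half of the tokens. That is where the per-forward-pass savings come from.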

Many-shot Jailbreaking

Many-shot Jailbreaking shows that the long context windows of modern language models create a new attack surface. An attacker fills the prompt with a large number of fabricated dialogues in which an assistant complies with harmful requests. This can steer the model into answering a final harmful query that its safety training would otherwise lead it to refuse. The paper finds that the attack's effectiveness grows with the number of in-context demonstrations and follows a power law. It argues for mitigations that remain robust as context windows grow.

Visualization-of-Thought

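Visualization-of-Thought (VoT) is a prompting method for eliciting spatial reasoning in large language models. The model does not reason in words alone. After each reasoning step, it is prompted to draw a text-based visualization of the intermediate state, such as a grid showing its current position, and to use these visualizations to guide its subsequent steps. The paper evaluates VoT on multi-hop spatial reasoning tasks in 2D grid worlds, including natural-language navigation, visual navigation, and visual tiling. It finds that VoT significantly improves spatial reasoning over conventional prompting.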
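
VoT asks the language model itself to write out a text grid after every step. The sketch below produces the same kind of trace in code, so you can see what such interleaved visualizations look like for a navigation task. The grid symbols, the move names, and the rule that blocked moves leave the agent in place are all assumptions for this illustration.

```python
# Symbols are assumptions for this sketch: "@" agent, "#" obstacle, "." free cell.
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


def render(width, height, pos, walls):
    """Draw the grid as text, one row per line."""
    rows = []
    for y in range(height):
        cells = []
        for x in range(width):
            if (x, y) == pos:
                cells.append("@")
            elif (x, y) in walls:
                cells.append("#")
            else:
                cells.append(".")
        rows.append("".join(cells))
    return "\n".join(rows)


def visualize_trace(start, moves, width, height, walls):
    """Apply moves one at a time, recording a visualization after each step."""
    pos = start
    states = [render(width, height, pos, walls)]
    for move in moves:
        dx, dy = MOVES[move]
        nxt = (pos[0] + dx, pos[1] + dy)
        # Blocked or out-of-bounds moves leave the agent in place
        if 0 <= nxt[0] < width and 0 <= nxt[1] < height and nxt not in walls:
            pos = nxt
        states.append(render(width, height, pos, walls))
    return pos, states


if __name__ == "__main__":
    final, states = visualize_trace((0, 0), ["right", "right", "down"], 3, 3, {(2, 0)})
    for step, grid in enumerate(states):
        print(f"step {step}:\n{grid}\n")
```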

Advancing LLM Reasoning

Advancing LLM Reasoning introduces Eurus, a suite of large language models (LLMs) optimized for reasoning. The paper does not propose a new architecture. Its contribution is alignment data: UltraInteract, a large-scale dataset for complex reasoning tasks in which each instruction comes with a preference tree of correct and incorrect reasoning trajectories, including multi-turn interactions with an environment and critiques. Models fine-tuned on this data perform strongly on reasoning benchmarks covering mathematics, code generation, and logical reasoning.

Representation Finetuning for LMs

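Representation Finetuning (ReFT) adapts a language model by learning task-specific interventions on its hidden representations while the base model's weights stay frozen. Parameter-efficient fine-tuning (PEFT) methods, by contrast, modify the weights. The paper's main instance, Low-rank Linear Subspace ReFT (LoReFT), edits representations only within a learned low-rank subspace. The authors report that LoReFT is considerably more parameter-efficient than prior PEFT methods such as LoRA. They also report strong results on commonsense reasoning, arithmetic reasoning, instruction-following, and natural language understanding benchmarks.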
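
The ReFT paper defines the LoReFT intervention as follows:

Φ(h) = h + Rᵀ(Wh + b − Rh)

Here R is an r × d matrix with orthonormal rows, W is a learned linear map, and b is a learned bias. Only R, W, and b are trained. The pure-Python sketch below applies this formula to a single hidden vector. The helper names are our own, and the code assumes R is orthonormal rather than enforcing it.

```python
def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def transpose(M):
    return [list(col) for col in zip(*M)]


def loreft(h, R, W, b):
    """LoReFT intervention: h + R^T (W h + b - R h).

    R is assumed to have orthonormal rows (r x d); W is r x d; b has length r.
    """
    # Difference between the target subspace coordinates and the current ones
    delta = [w + bi - r for w, bi, r in zip(matvec(W, h), b, matvec(R, h))]
    # Map the edit back into the d-dimensional representation space
    back = matvec(transpose(R), delta)
    return [hi + di for hi, di in zip(h, back)]
```

The intervention replaces the component of h that lies in the subspace spanned by R's rows with Wh + b, and leaves the orthogonal component untouched. For example, with R = [[1, 0, 0]], W = [[0, 0, 0]], and b = [5], the vector [2, 3, 4] becomes [5, 3, 4].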

CodeGemma

CodeGemma is a family of open code models built on Google's Gemma models. It includes pretrained variants specialized for code completion and generation, plus an instruction-tuned variant for chat-style coding tasks. These were obtained by further training Gemma checkpoints on large amounts of primarily code data. The paper reports strong results on code completion and synthesis benchmarks among models of similar size, while the models retain much of Gemma's natural-language ability. This suggests applications in software development tools.

Infini-Transformer

Infini-Transformer proposes a way to scale transformers to infinitely long inputs with bounded memory and computation. Its key component, Infini-attention, adds a compressive memory to standard attention. Within each segment, the model applies ordinary masked local attention. Meanwhile, a linear-attention memory accumulates key-value information from all previous segments and is queried for long-range context, and a learned gate mixes the two. The paper reports strong results on long-context language modeling, on passkey retrieval with 1M-token inputs, and on book summarization at 500K tokens.
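
The compressive memory in Infini-attention can be sketched with the linear-attention update the paper describes, where σ(x) = ELU(x) + 1:

- Each segment's keys and values are folded into a memory matrix: M ← M + σ(K)ᵀV.
- A normalization vector is updated alongside it: z ← z + Σₜ σ(kₜ).
- A query q retrieves σ(q)ᵀM / σ(q)ᵀz.
- A learned scalar gate then mixes this long-range readout with ordinary local attention.

This pure-Python sketch covers a single head. The class and function names are hypothetical, and the paper's delta-rule variant of the update is omitted.

```python
import math


def elu_plus_one(x):
    """sigma(x) = ELU(x) + 1, which keeps memory weights positive."""
    return x + 1.0 if x > 0 else math.exp(x)


class CompressiveMemory:
    """Single-head linear-attention memory with a fixed d_key x d_value size."""

    def __init__(self, d_key, d_value):
        self.M = [[0.0] * d_value for _ in range(d_key)]
        self.z = [0.0] * d_key

    def retrieve(self, q):
        sq = [elu_plus_one(x) for x in q]
        denom = sum(a * b for a, b in zip(sq, self.z))
        d_value = len(self.M[0])
        if denom == 0.0:  # nothing stored yet
            return [0.0] * d_value
        return [sum(sq[i] * self.M[i][j] for i in range(len(sq))) / denom
                for j in range(d_value)]

    def update(self, keys, values):
        # Fold a whole segment into memory; the memory size never grows
        for k, v in zip(keys, values):
            sk = [elu_plus_one(x) for x in k]
            for i, s in enumerate(sk):
                self.z[i] += s
                for j, vj in enumerate(v):
                    self.M[i][j] += s * vj


def gate(a_mem, a_local, beta):
    """Mix memory readout and local attention output with a learned scalar beta."""
    g = 1.0 / (1.0 + math.exp(-beta))
    return [g * m + (1.0 - g) * l for m, l in zip(a_mem, a_local)]
```

In a full model, each segment first queries the memory built from earlier segments and then writes its own keys and values with `update`. Memory cost therefore stays fixed no matter how many segments are processed.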

Overview of Multilingual LLMs

Overview of Multilingual LLMs provides a comprehensive survey of recent advancements in multilingual language models. The paper reviews various architectures, training techniques, and evaluation metrics used in developing multilingual LLMs. It also highlights the challenges and future directions in this field, emphasizing the importance of building models that can understand and generate text in multiple languages.

LM-Guided Chain-of-Thought

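LM-Guided Chain-of-Thought uses a small language model to guide a larger one through reasoning. A lightweight model, under 1B parameters, generates a rationale for the input. A frozen large language model then uses that rationale to predict the final answer, so only the small model needs training. The small model is first trained by knowledge distillation on rationales produced by the large model. It is then further optimized with reinforcement learning using rationale-oriented and task-oriented rewards. The paper reports that this setup improves answer accuracy on multi-hop extractive question answering compared with standard chain-of-thought prompting.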
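
In LM-Guided Chain-of-Thought, the division of labor at inference time is that the small model writes the rationale and the frozen large model produces the answer from it. The sketch below shows that two-stage pipeline, assuming each model is exposed as a function from a prompt string to a completion. `small_lm`, `large_lm`, and the prompt templates are hypothetical placeholders, and the small model's training (distillation followed by reinforcement learning) is not shown.

```python
from typing import Callable, Tuple

LM = Callable[[str], str]  # hypothetical interface: prompt -> completion


def lm_guided_cot(question: str, context: str, small_lm: LM, large_lm: LM) -> Tuple[str, str]:
    """Small LM writes the rationale; frozen large LM answers using it."""
    # Stage 1: the lightweight (trainable) model produces the reasoning
    rationale = small_lm(f"Context: {context}\nQuestion: {question}\nRationale:")
    # Stage 2: the large (frozen) model predicts the answer, conditioned on the rationale
    answer = large_lm(
        f"Context: {context}\nQuestion: {question}\nRationale: {rationale}\nAnswer:"
    )
    return rationale, answer
```

Because the large model is only ever called as a black box, this design works even when its weights are inaccessible.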

The Physics of Language Models

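The Physics of Language Models is a series of studies that treats language models as objects of controlled experiment. It trains models on synthetic data to measure precisely how they acquire, store, and use knowledge. Its installment on knowledge capacity scaling laws uses synthetic biographies and estimates that language models can store about 2 bits of knowledge per parameter, even when quantized to int8. By that estimate, a 7B-parameter model could hold roughly 14B bits of knowledge. The study also examines how this capacity is affected by training duration, model architecture, quantization, sparsity (as in mixture-of-experts models), and data quality.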
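
The knowledge-capacity installment of this series reports that language models store about 2 bits of knowledge per parameter, and turning that into a capacity estimate is simple arithmetic. The sketch below takes that reported figure as its only input. The function names are our own, and the conversion to bytes is just a unit change for intuition, not a claim from the paper.

```python
BITS_PER_PARAM = 2.0  # reported estimate from the knowledge-capacity study


def knowledge_capacity_bits(n_params, bits_per_param=BITS_PER_PARAM):
    """Estimated knowledge capacity in bits for a model with n_params parameters."""
    return n_params * bits_per_param


def bits_to_gigabytes(bits):
    return bits / 8 / 1e9


if __name__ == "__main__":
    bits = knowledge_capacity_bits(7e9)
    print(f"7B model: {bits:.3g} bits (~{bits_to_gigabytes(bits):.2f} GB)")
```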

Best Practices and Lessons on Synthetic Data

Best Practices and Lessons on Synthetic Data gives an overview of research on synthetic data for language models. It motivates synthetic data as a response to the scarcity, privacy concerns, and high cost of collecting large, high-quality real datasets. The paper surveys how synthetic data is generated and used across applications such as reasoning, tool use, multilinguality, and alignment. It discusses the challenge of ensuring that synthetic data is factual, faithful to real-world distributions, and unbiased, and it outlines directions for using synthetic data responsibly.

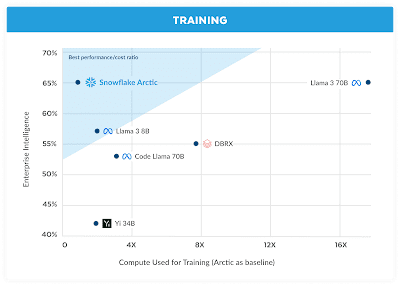

Llama 3

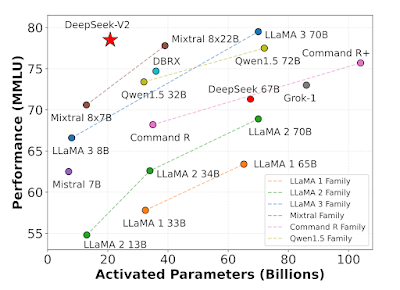

Llama 3 is the latest generation of Meta's Llama language models, initially released as pretrained and instruction-tuned models with 8B and 70B parameters. Compared with Llama 2, it has three main changes:

- a tokenizer with a 128K-token vocabulary;
- grouped-query attention in both model sizes;
- pretraining on over 15T tokens of publicly available data.

Meta reports that Llama 3 substantially outperforms Llama 2 and achieves state-of-the-art results among open models at these scales across a wide range of benchmarks.

Mixtral 8x22B

Mixtral 8x22B is Mistral AI's sparse mixture-of-experts (SMoE) language model. Each transformer layer contains eight feed-forward experts, and for every token a router selects two of them. As a result, only about 39B of the model's 141B total parameters are active per token. This sparsity gives the model the capacity of a much larger network at a fraction of the inference cost. Mistral AI reports strong results on reasoning, coding, mathematics, and multilingual benchmarks relative to other open models. The model has a 64K-token context window.
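
The sketch below shows top-2 expert routing for a single token, in the style Mixtral uses:

1. A linear router scores all eight experts.
2. The two highest-scoring experts run.
3. Their outputs are combined with softmax weights computed over just those two scores.

The expert functions and router weights here are toy assumptions. In the real model, each expert is a feed-forward network inside a transformer layer.

```python
import math


def top2_moe(x, router_w, experts, k=2):
    """Sparse MoE forward pass for one token vector x.

    router_w: one weight row per expert (toy linear router)
    experts:  list of callables mapping a vector to a same-sized vector
    """
    # Router logits: one score per expert
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_w]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected logits only (shifted by the max for stability)
    m = max(logits[i] for i in top)
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    gates = [e / total for e in exps]
    # Only the k chosen experts are evaluated
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top, gates
```

Because only two of the eight expert networks run per token, per-token compute tracks the active parameter count rather than the total.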

A Survey on RAG

A Survey on RAG provides a comprehensive overview of retrieval-augmented generation (RAG) models. The paper reviews the current state of RAG research, including model architectures, training techniques, and applications. It also identifies key challenges and future directions in the development of RAG models.

How Faithful are RAG Models

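How Faithful are RAG Models? investigates the tension between a language model's internal knowledge (its prior) and the information supplied by retrieval. The authors ask models such as GPT-4 questions with and without retrieved documents, including documents in which the correct answer has been deliberately perturbed. They then measure how often the model adopts the retrieved content over its own prior answer. Models readily adopt incorrect retrieved information. However, they are less likely to follow the retrieved content when they are more confident in their prior, and when the perturbed value deviates further from it. The findings highlight the need to account for this tug-of-war when evaluating and deploying retrieval-augmented generation systems.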
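
The central measurement here can be sketched as a context-preference rate. Among questions where the retrieved document's answer differs from the model's own prior answer, it asks how often the model's final answer follows the document. The record format and function below are hypothetical and are not the authors' evaluation code. Grouping the results by perturbation level shows whether adoption falls as the document strays further from the prior.

```python
from collections import defaultdict


def context_preference_rate(records):
    """Fraction of answers that follow the retrieved document, per perturbation level.

    Each record is a hypothetical tuple:
      (perturbation_level, model_answer, prior_answer, document_answer)
    """
    counts = defaultdict(lambda: [0, 0])  # level -> [followed_document, total]
    for level, answer, prior, document in records:
        if prior == document:
            continue  # prior and document agree, so preference is unobservable
        counts[level][1] += 1
        if answer == document:
            counts[level][0] += 1
    return {level: followed / total for level, (followed, total) in sorted(counts.items())}
```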

Emerging AI Agent Architectures

Emerging AI Agent Architectures surveys recent AI agent implementations, focusing on their ability to reason, plan, and call tools to achieve complex goals. The paper compares single-agent and multi-agent architectures. It discusses design choices such as leadership structure and communication styles among agents, and the role of distinct planning, execution, and reflection phases. Drawing on observations of existing agent systems, it identifies key design patterns as well as limitations of current implementations and evaluation practices.

Chinchilla Scaling: A replication attempt

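Chinchilla Scaling: A replication attempt revisits one of the three methods Hoffmann et al. used to derive the Chinchilla compute-optimal scaling laws: fitting a parametric model of loss as a function of model size and number of training tokens. The authors reconstruct the underlying data from the original paper's figures and refit the model. They find that the published estimates are inconsistent with the other two methods, fit the reconstructed data poorly, and come with implausibly narrow confidence intervals. Their own fit is consistent with the other two approaches, which supports the broader Chinchilla conclusion about how to balance model size against training data.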

Phi-3

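Phi-3 is Microsoft's family of small language models, led by phi-3-mini, a 3.8B-parameter model trained on 3.3T tokens. The report attributes the model's quality mainly to its training data rather than to scale: heavily filtered public web data combined with synthetic data. It reports that phi-3-mini rivals much larger models such as Mixtral 8x7B and GPT-3.5 on academic benchmarks, for example scoring 69% on MMLU, while being small enough to run locally on a phone. The report also describes the larger phi-3-small (7B) and phi-3-medium (14B) models.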
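
Whether a model fits on a phone depends largely on how much memory its weights need. The sketch below gives a rough, weights-only estimate for a 3.8B-parameter model such as phi-3-mini at different precisions. It ignores activations, the KV cache, and quantization metadata, so real footprints will be somewhat larger. The function name is our own.

```python
def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    """Bytes needed to store the weights alone at a given precision."""
    return n_params * bits_per_weight / 8


if __name__ == "__main__":
    for bits in (16, 8, 4):
        gib = weight_bytes(3.8e9, bits) / 2**30
        print(f"{bits:>2}-bit weights: {gib:.2f} GiB")
```

At 4 bits per weight, this comes to 1.9 GB (about 1.77 GiB) for the weights alone.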

OpenELM

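OpenELM is Apple's family of open, efficient language models. Its main architectural idea is layer-wise scaling. Most transformers give every layer the same number of attention heads and the same feed-forward width; OpenELM instead varies these per layer, allocating parameters non-uniformly across the model's depth. Apple releases the complete framework for training and evaluating the models on publicly available datasets, including training logs and multiple checkpoints. It reports that OpenELM, at about 1.1B parameters, outperforms the comparably sized OLMo by 2.36% in accuracy while requiring half as many pretraining tokens.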
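
Layer-wise scaling can be sketched as linear interpolation across depth. As we read the OpenELM paper, layer i of N gets

α_i · d_model / d_head attention heads, with α_i = α_min + (α_max − α_min) · i / (N − 1)

and a feed-forward width of β_i · d_model, with β interpolated the same way. The function below implements that schedule. The α and β ranges, the model sizes, and the rounding to whole heads are hypothetical choices for illustration, not OpenELM's published configuration.

```python
def layerwise_config(n_layers, d_model, d_head, alpha=(0.5, 1.0), beta=(0.5, 4.0)):
    """Return (num_heads, ffn_width) for each layer under linear layer-wise scaling.

    alpha, beta: hypothetical (min, max) ranges for the head and FFN multipliers.
    """
    config = []
    for i in range(n_layers):
        # Position of this layer in [0, 1]; a single layer uses the minimum values
        t = i / (n_layers - 1) if n_layers > 1 else 0.0
        a = alpha[0] + (alpha[1] - alpha[0]) * t
        b = beta[0] + (beta[1] - beta[0]) * t
        heads = max(1, round(a * d_model / d_head))  # rounding is an assumption
        ffn = round(b * d_model)
        config.append((heads, ffn))
    return config
```

With these ranges, early layers are narrow and later layers are wide, so the same parameter budget is spent unevenly across depth instead of uniformly.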

AutoCrawler

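AutoCrawler is a framework in which large language models generate reusable web crawlers, rather than extracting data from each page individually. It is a two-stage framework that exploits the hierarchical structure of HTML for progressive understanding. Through top-down and step-back operations over the page's DOM tree, the model can learn from erroneous actions and progressively prune the HTML it has to consider. The resulting actions are turned into a crawler that can be applied to other pages of the same kind. The paper reports that this approach generates more effective crawlers than previous methods, which is useful for applications such as search engines and large-scale data collection.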
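
AutoCrawler aims to produce a reusable crawler, meaning extraction rules that can be run on new pages without calling the LLM again. The sketch below shows what executing such rules might look like, assuming the rules are XPath-style paths keyed by field name. The rule format, field names, and sample page are hypothetical. Note also that Python's `xml.etree.ElementTree` supports only a subset of XPath and requires well-formed markup, which most real-world HTML is not.

```python
import xml.etree.ElementTree as ET

# Hypothetical rules: field name -> path in ElementTree's XPath subset
RULES = {
    "title": ".//h1[@class='title']",
    "price": ".//span[@class='price']",
}


def apply_rules(page, rules):
    """Run extraction rules against one well-formed page; missing fields map to None."""
    root = ET.fromstring(page)
    result = {}
    for name, path in rules.items():
        node = root.find(path)
        text = node.text if node is not None else None
        result[name] = text.strip() if text else None
    return result
```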

Self-Evolution of LLMs

Self-Evolution of LLMs surveys approaches that let large language models improve themselves by autonomously acquiring, refining, and learning from experiences they generate, rather than relying solely on human-annotated data. The paper proposes a conceptual framework that describes self-evolution as an iterative cycle of four phases: experience acquisition, experience refinement, updating, and evaluation. It organizes existing methods along these phases and discusses open challenges and future directions.

AI-powered Gene Editors

AI-powered Gene Editors applies generative AI to the design of CRISPR gene editors. The authors train language models on a large, curated collection of CRISPR-Cas sequences and use them to generate diverse new Cas proteins, many of which differ substantially from any naturally occurring sequence. One generated editor, OpenCRISPR-1, shows activity and specificity in human cells comparable to or better than the widely used SpCas9, despite being hundreds of mutations away from it in sequence. OpenCRISPR-1 has been released openly.

Make Your LLM Fully Utilize the Context

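Make Your LLM Fully Utilize the Context targets the lost-in-the-middle problem, in which long-context models overlook information located in the middle of their input. The paper proposes information-intensive (IN2) training, a purely data-driven fix. It synthesizes long-context question-answer training data in which answering requires fine-grained awareness of a short segment, about 128 tokens long, placed anywhere in a long context of 4K to 32K tokens. Some examples additionally require integrating information from multiple such segments. Applying IN2 training to Mistral-7B yields FILM-7B. The authors report that FILM-7B retrieves information more robustly from different positions in its 32K-token context window while maintaining performance on short-context tasks.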
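
The data recipe behind IN2 training can be sketched as follows. A short key segment is placed at a random position among unrelated filler segments, and the resulting long context is paired with a question that only that segment can answer. Training on such examples rewards the model for attending to every position. The function, its parameters, and the use of whole segments instead of token counts are simplifying assumptions. The paper's pipeline also uses a strong LLM to generate its questions and answers, and builds examples that require combining several segments.

```python
import random


def make_in2_example(key_segment, question, answer, filler_segments, n_filler, rng):
    """Build one long-context QA example whose answer lives in `key_segment`.

    filler_segments: pool of unrelated text segments (hypothetical corpus)
    n_filler:        how many filler segments surround the key segment
    rng:             a random.Random instance, for reproducibility
    """
    fillers = rng.sample(filler_segments, n_filler)
    # randint is inclusive, so the key can land first, last, or anywhere between
    pos = rng.randint(0, n_filler)
    segments = fillers[:pos] + [key_segment] + fillers[pos:]
    context = "\n".join(segments)
    prompt = f"{context}\n\nQuestion: {question}\nAnswer:"
    return {"prompt": prompt, "answer": answer, "key_position": pos}
```

Drawing the position uniformly is the point of the design: the model sees the answer at the start, middle, and end of the context equally often during training.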