Machine learning (ML) is one of the most active and consequential areas of computing. In a wide-ranging talk, Jeff Dean of Google traces how the field has developed and where it may be heading, crediting the work of many colleagues at Google along the way. This post summarizes the main points of that talk: how machine learning is changing the way we interact with technology, and what may come next.

The Evolution of Machine Learning

A decade or so ago, computers were quite limited at tasks such as speech recognition, image understanding, and natural language processing. Today, thanks to major advances in machine learning, we expect them to perceive the world around us far more accurately: to transcribe speech, identify what is in a photo, and interpret written text. This progress has improved existing products and has also made entirely new capabilities possible across many fields.

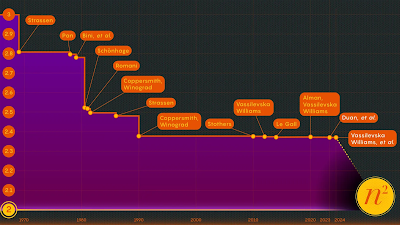

Scaling and Specialized Hardware

A key observation of recent years is that scaling pays off: larger datasets, larger and more capable models, and especially specialized hardware built for machine learning workloads have together produced large gains in accuracy and efficiency. Google's Tensor Processing Units (TPUs) are an example of such hardware. These accelerators are designed specifically for machine learning, and they substantially improve the performance of machine learning models while lowering cost and energy use.

Breakthroughs in Language Understanding

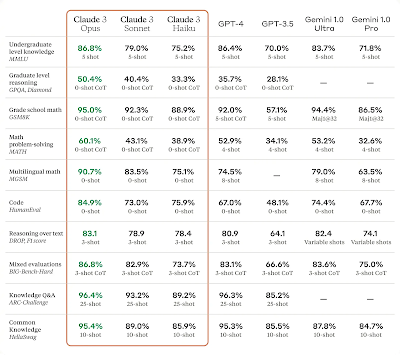

Language understanding is perhaps the area of most visible progress. Google's BERT substantially improved how well computers understand text, including complex search queries, while OpenAI's GPT series showed that large models can generate fluent, human-like text. Neural models have also made machine translation markedly more accurate. Together, these systems move beyond simple categorization tasks toward understanding and producing nuanced language, pointing to more natural and effective interaction between people and computers.

Multimodal Models: The Future of Machine Learning

Looking ahead, combining multiple kinds of data (text, images, audio, and video) in a single model is a significant step forward. Jeff Dean highlights projects such as Google's Gemini, which aims to understand and generate content across these modalities, so that one model can draw on what it reads, sees, and hears at the same time. This multimodal approach opens new possibilities for applications in education, creative work, and beyond.

The Impact of Machine Learning Across Sectors

The influence of machine learning extends well beyond technology companies. In healthcare, models can diagnose certain diseases from medical images with accuracy comparable to, and in some studies exceeding, that of human experts. In environmental science, machine learning is being used to model the impacts of climate change more accurately. And in everyday life, smartphone features such as Night Sight and Portrait Mode on Google's Pixel phones rely on machine learning to produce better photos.

Ethical Considerations and the Future

As machine learning becomes more deeply embedded in our lives, addressing its ethical implications becomes essential. Data privacy, algorithmic bias, and the environmental cost of training large models are all areas of active research and debate. Published guidelines such as Google's AI Principles stress the importance of building technology that is beneficial, fair, and accountable.

Conclusion

Machine learning is at an exciting point: advances in hardware, algorithms, and data processing are producing breakthroughs across many domains. Going forward, multimodal models, together with a commitment to ethical and responsible use, will be central to realizing the field's full potential. The progress so far has been remarkable, and the coming years are likely to bring further innovation.