Introduction to DeepSeek-V2

The search for language models that are both more capable and cheaper to run continues at a rapid pace. DeepSeek-V2 targets both goals with a Mixture-of-Experts (MoE) architecture designed for economical training and efficient inference. The model has 236 billion parameters in total, but only 21 billion of them are activated for each token. This sparse design keeps the computation per token close to that of a much smaller dense model, which lowers training cost. The reduced memory footprint at inference time comes chiefly from its attention mechanism.
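To make the idea of sparse activation concrete, here is a minimal Python sketch of top-k expert routing. It is a toy, not DeepSeek-V2's implementation: the eight scalar "experts", the router logits, and the helper names (`softmax`, `top_k_route`, `moe_layer`) are all hypothetical, and a real router computes its scores from the token's hidden state. The sketch also prints the active-parameter fraction implied by the 236B/21B figures.

```python
import math

# Figures quoted in the text: total vs. per-token activated parameters (billions).
TOTAL_PARAMS_B = 236
ACTIVE_PARAMS_B = 21

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k):
    """Pick the k experts with the highest gate probability.

    Returns a list of (expert_index, gate_weight) pairs, best first.
    Gates are not renormalized over the selected experts in this toy.
    """
    probs = softmax(router_logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, probs[i]) for i in ranked[:k]]

def moe_layer(x, experts, router_logits, k):
    """Toy MoE layer: only the k selected experts are evaluated for input x."""
    selected = top_k_route(router_logits, k)
    output = sum(weight * experts[i](x) for i, weight in selected)
    return output, [i for i, _ in selected]

# Eight hypothetical "experts"; each one just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

out, used = moe_layer(1.0, experts, router_logits, k=2)
print("experts evaluated:", used)
print(f"active fraction of DeepSeek-V2 params: {ACTIVE_PARAMS_B / TOTAL_PARAMS_B:.1%}")
```

Only the two experts with the highest router scores (indices 4 and 1 here) are evaluated; the other six cost nothing for this token. The same principle is why DeepSeek-V2 activates only about 8.9% of its parameters per token.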

Revolutionary Architectural Enhancements

DeepSeek-V2 builds on two architectural components: Multi-head Latent Attention (MLA) and the DeepSeekMoE framework. MLA compresses the keys and values of each token into a small latent vector, so the key-value (KV) cache stores only that latent instead of full per-head keys and values. Compared with DeepSeek 67B, this reduces the KV cache by 93.3% and raises maximum generation throughput to 5.76 times, and the authors report that MLA performs better than standard multi-head attention rather than trading accuracy for speed. DeepSeekMoE, in turn, makes it economical to train a strong model through sparse computation: it splits the feed-forward layers into many fine-grained experts, routes each token to only a few of them, and keeps a small set of shared experts that every token uses.
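The following Python sketch illustrates the caching idea behind MLA under simplifying assumptions: each token's hidden state is projected down to a small latent vector, only that latent is cached, and per-head keys and values are reconstructed from it by up-projection when attention needs them. All dimensions, weight names (`W_down`, `W_up_k`, `W_up_v`) and helper functions are hypothetical toy values. The sketch also omits parts of the real design, such as the separate positional (RoPE) key component and the compression of queries.

```python
import random

def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def rand_matrix(rows, cols, rng):
    """Small random weights, standing in for learned projections."""
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]

# Hypothetical toy dimensions (not DeepSeek-V2's real configuration).
D_MODEL = 64    # hidden size
N_HEADS = 4     # attention heads
D_HEAD = 16     # per-head dimension
D_LATENT = 8    # compressed KV latent size, much smaller than N_HEADS * D_HEAD

rng = random.Random(0)
W_down = rand_matrix(D_LATENT, D_MODEL, rng)           # hidden state -> latent
W_up_k = rand_matrix(N_HEADS * D_HEAD, D_LATENT, rng)  # latent -> keys, all heads
W_up_v = rand_matrix(N_HEADS * D_HEAD, D_LATENT, rng)  # latent -> values, all heads

kv_cache = []  # holds ONLY the latent vector of each past token

def append_token(h):
    """Compress a token's hidden state and cache just its latent."""
    kv_cache.append(matvec(W_down, h))

def keys_and_values():
    """Reconstruct per-head keys and values from the cached latents."""
    keys = [matvec(W_up_k, c) for c in kv_cache]
    values = [matvec(W_up_v, c) for c in kv_cache]
    return keys, values

# Feed ten random "tokens" through the cache.
for _ in range(10):
    append_token([rng.gauss(0.0, 1.0) for _ in range(D_MODEL)])

keys, values = keys_and_values()
cached = sum(len(c) for c in kv_cache)
full = 2 * N_HEADS * D_HEAD * len(kv_cache)
print(f"cached floats: {cached}, per-head K/V caching would store: {full}")
```

With these toy sizes the cache holds 80 numbers for 10 tokens instead of 1,280. Keys and values are materialized explicitly here only for readability; an optimized implementation would try to avoid that extra work.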

Training Economies and Efficiency

Compared with DeepSeek 67B, DeepSeek-V2 saves 42.5% of training costs. The savings come largely from its sparse MoE design: because only a fraction of the parameters is activated for each token, each training step requires far fewer computations than a dense model of similar total size would. DeepSeek-V2 also supports a context length of up to 128K tokens, which lets it handle long documents and other tasks that depend on information spread across a long input.
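Long contexts are where KV cache size matters most, because the cache grows linearly with the number of tokens. The Python sketch below estimates per-sequence cache memory at a context of about 128K tokens for two caching schemes: separate keys and values for every head, and a single compressed latent plus a small positional key. The layer count, head count, head size, latent size and positional-key size are assumptions chosen for illustration, as is 2-byte (fp16/bf16) storage. The resulting ratio is not meant to reproduce the KV cache reduction of about 93% reported for DeepSeek-V2, which is measured against DeepSeek 67B rather than against per-head caching.

```python
def kv_cache_bytes(context_len, n_layers, elems_per_token_per_layer, bytes_per_elem=2):
    """Total KV cache size in bytes for one sequence (2 bytes assumes fp16/bf16)."""
    return context_len * n_layers * elems_per_token_per_layer * bytes_per_elem

# Hypothetical model shape, chosen only for illustration.
N_LAYERS = 60
N_HEADS = 128
D_HEAD = 128
D_LATENT = 512  # compressed latent size per token per layer (assumed)
D_ROPE = 64     # extra positional key dims cached next to the latent (assumed)

CONTEXT = 128_000  # roughly the 128K-token context from the text

mha_elems = 2 * N_HEADS * D_HEAD   # separate K and V for every head
latent_elems = D_LATENT + D_ROPE   # one shared latent plus a positional key

for name, elems in [("per-head K/V", mha_elems), ("latent", latent_elems)]:
    gib = kv_cache_bytes(CONTEXT, N_LAYERS, elems) / 2**30
    print(f"{name:>12}: {gib:,.1f} GiB")
```

Under these assumptions, per-head caching needs several hundred GiB for a single 128K-token sequence, while the latent scheme needs under 10 GiB. This illustrates why compressing the cache helps make long contexts practical to serve.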

Pre-training and Fine-Tuning

DeepSeek-V2 was pretrained on a high-quality, multi-source corpus of 8.1 trillion tokens. Compared with the corpus used for DeepSeek 67B, it contains substantially more data, particularly Chinese data, which contributes to the model's robustness and versatility. After pretraining, the model underwent Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) to align it more closely with human preferences and improve its conversational ability.

Comparative Performance and Future Applications

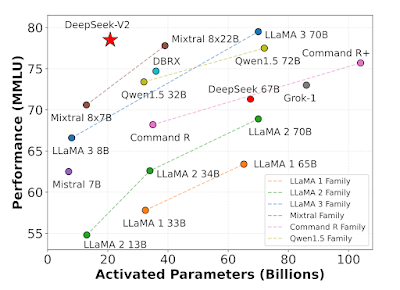

On standard benchmarks in both English and Chinese, DeepSeek-V2 outperforms its predecessor DeepSeek 67B and, with only 21 billion activated parameters, ranks among the strongest open-source models. Its gains in training and inference efficiency make it attractive for a range of applications, from automated customer service to sophisticated data analysis. Looking ahead, areas such as real-time multilingual translation and automated content generation are promising uses for the model.

Conclusion and Forward Look

DeepSeek-V2 represents a significant advance in language models. By combining a sparse MoE architecture with a compressed attention cache, it delivers strong performance at substantially lower training and inference cost. As development continues, models like DeepSeek-V2 are likely to keep pushing the boundaries of machine learning and to make AI more accessible and effective across industries.
