Large language models (LLMs) have become a transformative force across industries. Their ability to process and generate human-like text supports a wide range of applications, from drafting content to automating tasks and improving communication. However, LLMs have been expensive to train, often costing millions or even hundreds of millions of dollars. This has limited access to these powerful tools, particularly for smaller businesses and organizations.

Snowflake is revolutionizing the LLM landscape with the introduction of Snowflake Arctic, a groundbreaking model specifically designed for enterprise use cases. Arctic breaks the cost barrier by achieving efficient training while delivering top-tier performance on tasks critical to businesses. This blog post dives deeper into the innovative features of Snowflake Arctic and explores its potential to democratize enterprise AI.

Efficiently Intelligent: Achieving More with Less

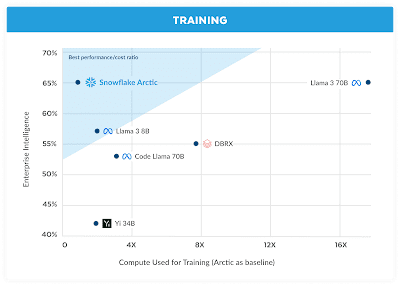

Training LLMs has traditionally required massive computational resources, which translates into very high costs. Snowflake Arctic addresses this challenge with an efficient training approach. It uses a Dense-MoE Hybrid transformer architecture that combines a dense transformer model with a residual mixture-of-experts (MoE) MLP. Because only a small share of the model's parameters is active for any given token, Arctic can reach high accuracy while spending far less compute during training.
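To make the idea of "active parameters" concrete, here is a rough back-of-the-envelope calculation. The figures are assumptions taken from Snowflake's public Arctic announcement rather than from this post (a 10B dense transformer, 128 experts of about 3.66B parameters each, and two experts used per token), so treat the result as an approximation.

```python
# Assumed figures (from Snowflake's public announcement, not stated in this post).
DENSE_PARAMS_B = 10.0    # dense transformer, in billions of parameters
NUM_EXPERTS = 128        # experts in the residual MoE MLP
EXPERT_PARAMS_B = 3.66   # parameters per expert, in billions
EXPERTS_PER_TOKEN = 2    # experts that run for each token


def total_params_b():
    """All parameters that must be stored: dense part plus every expert."""
    return DENSE_PARAMS_B + NUM_EXPERTS * EXPERT_PARAMS_B


def active_params_b():
    """Parameters actually used per token: dense part plus the chosen experts."""
    return DENSE_PARAMS_B + EXPERTS_PER_TOKEN * EXPERT_PARAMS_B


if __name__ == "__main__":
    total = total_params_b()
    active = active_params_b()
    print(f"total  ~ {total:.1f}B parameters")
    print(f"active ~ {active:.1f}B parameters per token")
    print(f"share of parameters active per token: {active / total:.1%}")
```

Under these assumptions, only a few percent of the stored parameters do work for any one token, which is where the compute savings come from.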

The secret behind Arctic's efficiency lies in its strategic use of experts. Most MoE models employ a limited number of experts. In contrast, Arctic has a much larger pool of experts, which gives the model more capacity and more ways to specialize, improving overall quality. Arctic also uses a top-2 gating mechanism: for each token, a router selects only the two best-matching experts from the pool. Only those experts' parameters are used for that token, so the compute per token stays low even though the total parameter count is large.
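The routing idea can be sketched in a few lines of plain Python. This is a toy illustration, not Arctic's actual implementation: single matrices stand in for the dense MLP and for each expert, all names (`top2_gate`, `hybrid_layer`, and so on) are hypothetical, and renormalizing the gate weights over the two chosen experts is just one common convention.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def top2_gate(router_logits):
    """Return [(expert_index, weight), ...] for the two highest-scoring experts."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top2 = order[:2]
    # Assumption: gate weights are renormalized over the chosen pair only.
    weights = softmax([router_logits[i] for i in top2])
    return list(zip(top2, weights))


def hybrid_layer(x, dense_w, router_w, expert_ws):
    """Dense path plus a residual MoE path that evaluates only two experts."""
    dense_out = matvec(dense_w, x)
    routes = top2_gate(matvec(router_w, x))
    moe_out = [0.0] * len(dense_out)
    for idx, weight in routes:  # every other expert is skipped entirely
        expert_out = matvec(expert_ws[idx], x)
        moe_out = [acc + weight * v for acc, v in zip(moe_out, expert_out)]
    return [d + m for d, m in zip(dense_out, moe_out)], routes


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    dim, num_experts = 4, 8
    x = [rng.uniform(-1, 1) for _ in range(dim)]
    dense_w = random_matrix(dim, dim, rng)
    router_w = random_matrix(num_experts, dim, rng)
    expert_ws = [random_matrix(dim, dim, rng) for _ in range(num_experts)]
    y, routes = hybrid_layer(x, dense_w, router_w, expert_ws)
    print("experts used:", [i for i, _ in routes])
    print("gate weights:", [round(w, 3) for _, w in routes])
```

However many experts exist in `expert_ws`, each call evaluates exactly two of them, so the cost per token depends on the dense path and two experts rather than on the size of the whole pool.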

Enterprise-Focused for Real-World Impact

While many LLMs prioritize generic capabilities, Snowflake Arctic takes a different approach. It is specifically designed to excel at tasks crucial for enterprise users. These tasks include:

- SQL Generation: Arctic can translate natural language instructions into clear and accurate SQL queries, empowering business users to extract valuable insights from data without extensive technical expertise.

- Code Completion and Instruction Following: Developers can leverage Arctic's capabilities to streamline coding workflows by automatically completing code snippets and precisely following complex instructions.

By excelling at these mission-critical tasks, Snowflake Arctic empowers businesses to automate processes, improve efficiency, and unlock the full potential of their data.
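As a small illustration of the SQL generation use case, the sketch below assembles a text-to-SQL prompt from a table definition and a plain-English question. The helper name `build_sql_prompt`, the prompt wording, and the example schema are all hypothetical; sending the prompt to Arctic (for example through Snowflake Cortex or a downloaded copy of the model) is deliberately left out.

```python
def build_sql_prompt(schema_ddl, question, dialect="Snowflake SQL"):
    """Assemble a text-to-SQL prompt. The wording is illustrative only."""
    return (
        f"You write {dialect}.\n"
        "Use only the tables and columns in this schema:\n"
        f"{schema_ddl.strip()}\n\n"
        f"Question: {question.strip()}\n"
        "Return a single SQL query and nothing else."
    )


if __name__ == "__main__":
    # Hypothetical schema used only for this example.
    schema = """
    CREATE TABLE orders (
        order_id INT,
        customer_id INT,
        total NUMBER(10, 2),
        ordered_at DATE
    );
    """
    prompt = build_sql_prompt(schema, "What was total revenue per customer in 2023?")
    print(prompt)
```

Including the schema in the prompt gives the model the table and column names it needs, which tends to matter more for query accuracy than the exact phrasing of the instructions.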

Truly Open: Empowering Collaboration and Innovation

Snowflake Arctic is not just efficient and enterprise-focused; it's also truly open-source. Snowflake releases the model's weights and code under the permissive Apache 2.0 license, allowing anyone to freely use and modify it. Additionally, Snowflake is committed to open research, sharing valuable insights and data recipes used to develop Arctic. This open approach fosters collaboration within the AI community and accelerates advancements in LLM technology.

The open-source nature of Arctic offers several significant benefits:

- Reduced Costs: Businesses and organizations can leverage Arctic's capabilities without hefty licensing fees, making enterprise-grade AI more accessible.

- Customization: Developers can fine-tune Arctic to address specific needs and workflows, enhancing its utility for unique enterprise applications.

- Faster Innovation: Open access to the model and research findings allows the broader AI community to contribute to its development and refinement, accelerating the pace of innovation.

Getting Started with Snowflake Arctic

Snowflake Arctic is readily available for exploration and experimentation. Here are some ways to get started:

- Hugging Face: Download Arctic directly from the popular Hugging Face platform.

- Snowflake Cortex: Snowflake customers can use Arctic through Snowflake Cortex, free of charge for a limited period.

- Model Gardens and Catalogs: Providers such as Amazon Web Services (AWS), Microsoft Azure, and the NVIDIA API catalog will soon offer Arctic in their respective model gardens and catalogs.

- Interactive Demos: Experience Arctic firsthand through live demos hosted on Streamlit Community Cloud and Hugging Face Streamlit Spaces.

Snowflake is also hosting an Arctic-themed Community Hackathon, providing mentorship and credits to participants who build innovative applications powered by Arctic.

Conclusion: A New Era for Enterprise AI

Snowflake Arctic represents a significant leap forward in LLM technology. By achieving exceptional efficiency, enterprise-focused capabilities, and a truly open-source approach, Arctic empowers businesses to unlock the transformative potential of AI at a fraction of the traditional cost. As the AI landscape continues to evolve, Snowflake Arctic is poised to democratize access to advanced LLMs, ushering in a new era of intelligent automation and data-driven decision-making for enterprises of all sizes.

Snowflake also plans to release a series of blog posts delving deeper into specific aspects of Arctic, such as its research journey, data composition techniques, and advanced MoE architecture.
