Few recent technologies have moved as quickly from research curiosity to everyday tool as large language models (LLMs). In only a few years these AI systems have gone from experimental novelties to cornerstone technologies that shape how we find information, communicate, and even think. From drafting articles to powering conversational AI, LLMs like Google's T5 and OpenAI's GPT-3 have demonstrated capabilities that once belonged to science fiction. But what exactly are these models, and why are they considered revolutionary? This blog post covers their genesis, the advances in architecture and training behind them, their applications, and the challenges they raise, and it offers a glimpse into a future where human-like textual understanding is just a query away.

1. The Genesis of Large Language Models

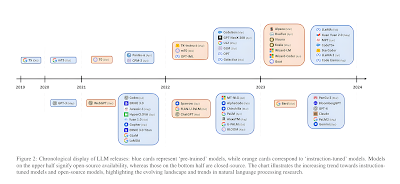

The field of artificial intelligence has been profoundly transformed by the advent of large language models (LLMs), such as Google's T5 and OpenAI's GPT-3. These models are not just tools for text generation; they represent a leap forward in how machines handle the nuances and complexities of human language. Unlike their predecessors, LLMs can read and generate text with a previously unattainable level of sophistication. The game-changer was the transformer architecture, introduced in 2017. Its self-attention mechanism relates every word (more precisely, every token) to every other word in the input at once, whereas earlier recurrent models processed text one word at a time.
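To make the idea of relating every word to every other word more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not how any production model is implemented: the learned query, key, and value projections of a real transformer are left out, and the function names and the three toy vectors are made up for this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Assumption: real transformers first apply learned query/key/value
    matrices; they are omitted here to keep the sketch short.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this position against every position, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The output is a weighted average of all the input vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three made-up 2-dimensional "word" vectors (hypothetical data).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one position attends to every position in the input. The rows sum to 1, and positions whose vectors point in similar directions receive more weight.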

These technologies have pushed natural language processing into a new era. T5, for instance, casts every text-based task as a text-to-text problem: both the input and the output are plain strings, and a short task prefix tells the model what to do, which makes a single model remarkably versatile. GPT-3, on the other hand, uses its 175 billion parameters to generate text that can be startlingly human-like, and it can compose poetry, translate between languages, and even write code. The growth of these models in size and scope highlights an ongoing trend: larger models have tended to handle a broader range of tasks, and to handle them with more nuance.
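As a rough illustration of T5's uniform input and output style, the sketch below turns different tasks into plain strings by prepending a task prefix. The prefixes follow the style used in the T5 paper, but the dictionary keys and the `to_text_to_text` helper are hypothetical names invented for this example, and no model is actually called.

```python
# Task prefixes in the style described in the T5 paper.
# The dictionary keys are hypothetical labels for this sketch.
TASK_PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
    "acceptability": "cola sentence: ",
}

def to_text_to_text(task, text):
    """Format any supported task as a single input string."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + text

translation_input = to_text_to_text("translate_en_de", "That is good.")
summary_input = to_text_to_text("summarize", "LLMs have grown quickly in size.")

print(translation_input)  # translate English to German: That is good.
print(summary_input)      # summarize: LLMs have grown quickly in size.
```

Because every task ends up as "string in, string out", the same model, training objective, and decoding procedure can serve translation, summarization, and classification alike.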

2. Advancements in Model Architecture and Training

Recent years have seen major advances in the architecture and training of large language models. Sparse attention mechanisms restrict each token to attending to a subset of positions rather than the full input, which substantially reduces the computational cost of long sequences. Meanwhile, the Mixture-of-Experts (MoE) approach adds a pool of specialized sub-networks ("experts") and a learned router that sends each token to only a few of them. Because only a fraction of the parameters is active for any given token, a model can grow in capacity without a matching growth in compute per token, and in practice this can also improve output quality across domains.
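The routing idea behind Mixture-of-Experts can be sketched in a few lines. The example below is a toy version under stated assumptions: the gate weights are fixed by hand rather than learned, each "expert" is just a function that scales its input, and names such as `route_token` and `make_scaling_expert` are hypothetical. Real MoE layers use neural networks as experts and typically add load-balancing terms that are not shown here.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_scaling_expert(factor):
    """Toy expert: multiplies every component by a fixed factor."""
    def expert(vector):
        return [factor * x for x in vector]
    return expert

def route_token(token, gate_weights, experts, top_k=2):
    """Send one token to its top_k experts and mix their outputs."""
    # One gate score per expert: dot product of the token and a gate row.
    scores = [sum(t * w for t, w in zip(token, row)) for row in gate_weights]
    # Keep only the top_k highest-scoring experts; the rest are never run.
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize the gate scores over the chosen experts only.
    mix = softmax([scores[i] for i in chosen])
    output = [0.0] * len(token)
    for weight, index in zip(mix, chosen):
        expert_out = experts[index](token)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, chosen

# Four toy experts and hand-picked gate weights (hypothetical values).
experts = [make_scaling_expert(f) for f in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

output, chosen = route_token([2.0, 1.0], gate_weights, experts, top_k=2)
print(chosen)  # [0, 1]
```

Only two of the four experts run for this token, which is the source of the efficiency gain: total parameter count grows with the number of experts, while per-token compute depends only on `top_k`.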

The way models are used after training has evolved as well. The shift towards few-shot and zero-shot learning, where a model performs a task from a handful of examples in its prompt or from an instruction alone, with no task-specific fine-tuning, is particularly significant. These methods underscore the models' ability to generalize from limited data, in a way loosely reminiscent of how people learn. For instance, GPT-3 can translate between languages even though it was never trained specifically as a translation system; it picked up the ability from the multilingual text in its general training data. Such capabilities point towards more adaptable, general-purpose AI systems.
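The difference between zero-shot and few-shot use comes down to what goes into the prompt. The sketch below only builds the prompt text and does not call any model; the `build_prompt` helper and the exact layout are assumptions made for illustration, with example pairs in the style of those shown in the GPT-3 paper.

```python
def build_prompt(instruction, examples, query):
    """Build a translation prompt with zero or more worked examples."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"English: {source}")
        lines.append(f"French: {target}")
        lines.append("")
    # The final entry is left open for the model to complete.
    lines.append(f"English: {query}")
    lines.append("French:")
    return "\n".join(lines)

instruction = "Translate English to French."

# Zero-shot: the instruction alone, no worked examples.
zero_shot = build_prompt(instruction, [], "cheese")

# Few-shot: the same instruction plus a handful of worked examples.
few_shot = build_prompt(
    instruction,
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)

print(few_shot)
```

In both cases the model's weights stay untouched. The examples are simply part of the input text, which is why this style of use is often called in-context learning.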

3. Applications Across Domains

The versatility of LLMs is perhaps most vividly illustrated by their wide range of applications across sectors. In healthcare, LLMs can assist in processing and summarizing medical records, giving clinicians faster access to crucial patient information. They can also help draft and personalize communication between patients and care providers, improving the healthcare experience. In the media industry, LLMs are used to draft articles, create content for social media, and even script videos, scaling content creation like never before.

Customer service has also been revolutionized by LLMs. AI-driven chatbots powered by models like GPT-3 can engage in human-like conversations, resolving customer inquiries with increasing accuracy and contextual awareness. This improves the customer experience and also raises operational efficiency, since the chatbot handles routine queries that would otherwise require human intervention. These applications are just the tip of the iceberg, as LLMs continue to find new uses in fields ranging from legal services to educational technology, where they can personalize learning and widen access to information.

4. Challenges and Ethical Considerations

Despite their potential, LLMs come with their own set of challenges and ethical concerns. The immense computational resources required to train such models carry a significant environmental cost, raising questions about the sustainability of current AI practices. Moreover, the data used to train these models often comes from the internet and can include biased or sensitive information. As a result, model outputs can perpetuate stereotypes or repeat inaccuracies, which highlights the need for rigorous ethical oversight of how training data is collected and used.

Furthermore, the potential misuse of these models to produce misleading information at scale, or to support deepfakes, is of great concern. Ensuring that these powerful tools are used responsibly requires continuous dialogue among technologists, policymakers, and the public. As these models become more capable, aligning their objectives with human values and ethics becomes ever more important, and it will take concerted effort to put robust governance frameworks in place.

Conclusion

The development of large language models is undoubtedly one of the most significant advancements in the field of artificial intelligence. As they evolve, these models hold the promise of redefining our interaction with technology, making AI more integrated into our daily lives. The journey of LLMs is far from complete, but as we look to the future, the potential for these models to further bridge the gap between human and machine intelligence is both exciting and, admittedly, a bit daunting.
