Embeddings are a core machine learning tool for capturing the meaning of data. Ollama now supports embedding models, so you can build Retrieval Augmented Generation (RAG) applications that combine text prompts with existing documents or other data.

What are Embedding Models?

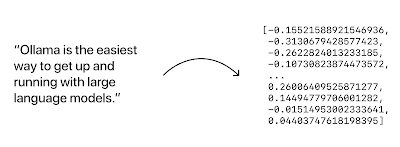

Embedding models work like translators for your data. They take text and convert it into dense vector embeddings: long lists of numbers that represent the semantic meaning of the text. Two embeddings can then be compared, typically with a similarity measure such as cosine similarity, to find text that is close in meaning, which makes them well suited to search and retrieval tasks.
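To make "comparing embeddings" concrete, here is a minimal sketch of cosine similarity, one common way to score how close two vectors are. The three-dimensional vectors are made up for illustration; real embedding models return vectors with hundreds of dimensions, and the function name `cosine_similarity` is our own.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Made-up toy vectors (assumption: not produced by any real model).
llama = [0.9, 0.1, 0.3]
alpaca = [0.8, 0.2, 0.35]
tractor = [0.1, 0.9, 0.0]

# The two animal vectors point in nearly the same direction,
# so they score higher than the unrelated pair.
print(cosine_similarity(llama, alpaca) > cosine_similarity(llama, tractor))  # prints True
```

A score near 1 means the vectors point the same way (similar meaning); a score near 0 means they are unrelated.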

Harnessing the Power of Ollama Embeddings

Ollama offers a variety of pre-trained embedding models, including mxbai-embed-large, nomic-embed-text, and all-minilm. These models are ready to use for generating vector embeddings from your text prompts.

Here's a glimpse of how Ollama makes embedding workflows a breeze:

- Multiple Usage Options: Generate embeddings through the REST API, the Python library, or the JavaScript library, whichever suits your development style.

- Seamless Integration: Ollama integrates with popular tools like LangChain and LlamaIndex, streamlining your embedding workflows.
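As a rough sketch of the REST option, the snippet below builds and sends an embedding request using only the Python standard library. It assumes an Ollama server running locally on the default port 11434 and an `/api/embeddings` endpoint that accepts `model` and `prompt` fields and returns an `embedding` array; check the API documentation for your Ollama version, since endpoint and field names may differ. The helper names `build_embedding_request` and `embed` are our own.

```python
import json
import urllib.request

# Assumption: default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/embeddings"

def build_embedding_request(model, prompt, url=OLLAMA_URL):
    """Build (but do not send) a POST request asking for one embedding."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def embed(model, prompt, url=OLLAMA_URL, timeout=30):
    """Send the request and return the embedding as a list of floats."""
    request = build_embedding_request(model, prompt, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    # Assumption: the response JSON carries the vector under "embedding".
    return payload["embedding"]

# Example call (requires a running server with the model already pulled):
#   vector = embed("mxbai-embed-large", "Llamas are members of the camelid family")
#   print(len(vector))
```

Splitting request construction from sending keeps the payload easy to inspect without a server running.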

Building a RAG Application with Ollama Embeddings

Let's dive into a practical example: creating a RAG application that retrieves relevant information and uses it to generate text.

We'll build a system that answers questions about llamas. First, we'll embed documents about llamas with one of Ollama's embedding models and store the results in a vector database. Then, when a user asks a question like "What animals are llamas related to?", the application will:

- Generate an embedding for the question.

- Retrieve the most relevant document from the database based on embedding similarity.

- Pass the retrieved document and the original question to one of Ollama's generation models to produce a comprehensive answer.
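The steps above can be sketched in a few lines of Python. To keep the example runnable without a server or a database, it makes several loud assumptions: `VectorStore` is a hypothetical in-memory stand-in for a real vector database, `toy_embed` is a word-count stand-in for an Ollama embedding model, and the `generate` callable stands in for an Ollama generation model. The sample documents and the prompt wording are illustrative only.

```python
import math
import re

def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class VectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self, embed):
        self.embed = embed   # callable: text -> list of floats
        self.items = []      # list of (document, vector) pairs

    def add(self, document):
        self.items.append((document, self.embed(document)))

    def most_similar(self, query):
        if not self.items:
            raise ValueError("the store is empty")
        query_vector = self.embed(query)
        best = max(self.items, key=lambda item: cosine(query_vector, item[1]))
        return best[0]

def build_prompt(question, context):
    """Combine the retrieved document with the user's question."""
    return f"Using this data: {context} Respond to this prompt: {question}"

def answer(question, store, generate):
    """Retrieve the closest document, then hand the prompt to the generator."""
    context = store.most_similar(question)
    return generate(build_prompt(question, context))

# Toy stand-in for an embedding model: counts a few hand-picked words.
VOCAB = ["related", "camelids", "camels", "domesticated", "pack", "andes", "grass", "hay"]

def toy_embed(text):
    words = re.findall(r"\w+", text.lower())
    return [float(words.count(term)) for term in VOCAB]

DOCUMENTS = [
    "Llamas are camelids, closely related to vicuñas and camels.",
    "Llamas were domesticated in the Andes as pack animals.",
    "Llamas graze on grass and hay.",
]

store = VectorStore(toy_embed)
for document in DOCUMENTS:
    store.add(document)

question = "What animals are llamas related to?"
# The echo "generator" just returns the prompt it was given.
print(answer(question, store, generate=lambda prompt: prompt))
```

In a real application you would replace `toy_embed` with a call to an Ollama embedding model, `VectorStore` with an actual vector database, and the echo lambda with a call to an Ollama generation model; the retrieve-then-generate flow stays the same.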

The Future of Ollama Embeddings

Ollama's commitment to empowering developers doesn't stop here. Stay tuned for exciting upcoming features like:

- Batch Embeddings: Process multiple prompts simultaneously for efficient data handling.

- OpenAI API Compatibility: Leverage the familiar OpenAI /v1/embeddings endpoint within Ollama.

- Expanded Model Support: Explore a wider range of embedding model architectures, including ColBERT and RoBERTa.

With Ollama's embedding models, you can unlock powerful search and retrieval functionalities in your applications. Get started today and explore the potential of RAG for your next project!
