Unlocking the Future of Multi-Agent Systems: TextGrad and Textual Gradient Descent

In recent years, large language models (LLMs) have advanced rapidly. We have become proficient at training large networks, or combinations of networks, through backpropagation. However, multi-agent systems now combine LLMs and tools in ways that do not form a differentiable chain. The nodes in these computational graphs (LLMs and tools) communicate through natural language, and they often sit with different vendors in different data centers, accessible only through APIs. This raises the question: is backpropagation obsolete? Not quite.

Introducing TextGrad

TextGrad implements an analog of backpropagation that operates on text and textual gradients. Let's break it down with a simple example. Suppose there are two LLM calls, and we want to optimize the prompt in the first call:

- Prediction: `Prediction = LLM(Prompt + Question)`

- Evaluation: `Evaluation = LLM(Evaluation Instruction + Prediction)`

For this chain, we can construct a backpropagation analog using a gradient operator ∇LLM. The operator is itself implemented with an LLM and mirrors the Reflection pattern: it returns feedback (a critique or reflection) on how to modify a variable to improve the final objective, such as “This prediction can be improved by...”.

Inside ∇LLM, the prompt first shows the forward pass, for example “Here is a conversation with an LLM: {x|y}”, then inserts the critique of the output, “Below are the criticisms on {y}: {∂L/∂y}”, and finally asks, “Explain how to improve {x}.”

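In our two-call example, we first calculate the gradient of the evaluation with respect to the prediction: `∂Evaluation/∂Prediction = ∇LLM(Prediction, Evaluation)`.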

This gives us instructions on how to adjust `Prediction` to improve `Evaluation`. Next, we determine how to adjust `Prompt`:

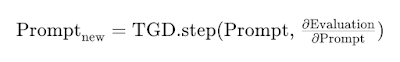

This forms the basis of a gradient optimizer called Textual Gradient Descent (TGD), which updates the variable as `Prompt_new = TGD.step(Prompt, ∂Evaluation/∂Prompt)`.

The TGD.step(x, ∂L/∂x) optimizer is also implemented with an LLM. It essentially uses a prompt like “Below are the criticisms on {x}: {∂L/∂x} Incorporate the criticisms, and produce a new variable.” to generate a new value for the variable (in our case, Prompt).
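As a rough sketch of the update step, assuming the simple prompt quoted above rather than the more elaborate prompts used in practice: `tgd_step` and `canned_llm` are hypothetical names, and the stand-in model returns a fixed rewrite so the example runs without any API.

```python
from typing import Callable, List

# Hypothetical type: any function mapping a prompt string to a completion string.
LLM = Callable[[str], str]


def tgd_step(llm: LLM, x: str, dL_dx: str) -> str:
    """Return a new value for x that incorporates the textual gradient dL_dx."""
    prompt = (
        f"Below are the criticisms on {x}: {dL_dx} "
        "Incorporate the criticisms, and produce a new variable."
    )
    return llm(prompt)


seen_prompts: List[str] = []  # records what the stand-in model was asked


def canned_llm(prompt: str) -> str:
    """Stand-in model: logs the prompt and returns a fixed rewrite."""
    seen_prompts.append(prompt)
    return "Solve the problem step by step and double-check the arithmetic."


old_prompt = "Answer the question."
critique = "The prompt does not ask the model to check its arithmetic."
new_prompt = tgd_step(canned_llm, old_prompt, critique)
print(new_prompt)
```

Unlike a numeric gradient step, there is no learning rate here: the size of the change is whatever the model decides to write.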

In practice, the operator prompts are more sophisticated and could theoretically be found using textual gradient descent, though this has not been demonstrated yet.

Versatile and Comprehensive Applications

This method allows for more complex computations defined by arbitrary computational graphs, where nodes can involve LLM calls, tools, and numerical simulators. If a node has multiple successors, all gradients from them are collected and aggregated before moving forward.
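One way to picture the aggregation step is a reverse traversal of the graph in which each node collects feedback from all of its successors before passing anything further back. The sketch below is a toy under stated assumptions: `Node`, `topo_order`, `backward`, and `toy_grad` are hypothetical names, aggregation is plain concatenation, and the gradient function is a stub instead of an LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    name: str
    parents: List["Node"] = field(default_factory=list)  # inputs to this node
    grads: List[str] = field(default_factory=list)       # feedback from successors

    def aggregated_grad(self) -> str:
        # Assumption: aggregation is concatenation of the collected feedback.
        return "\n".join(self.grads)


def topo_order(root: Node) -> List[Node]:
    """Post-order DFS from the loss node: every parent appears before its children."""
    order: List[Node] = []
    seen = set()

    def visit(node: Node) -> None:
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent in node.parents:
            visit(parent)
        order.append(node)

    visit(root)
    return order


def backward(loss: Node, grad_fn: Callable[[Node, Node, str], str]) -> None:
    """Walk from the loss towards the inputs, pushing feedback to each parent."""
    for node in reversed(topo_order(loss)):
        upstream = node.aggregated_grad()  # all successors have already reported
        for parent in node.parents:
            parent.grads.append(grad_fn(parent, node, upstream))


def toy_grad(parent: Node, child: Node, upstream: str) -> str:
    """Stub for the LLM-based gradient operator."""
    return f"feedback on {parent.name} via {child.name}"


# One prompt feeds two drafts, and both drafts feed the loss.
prompt = Node("prompt")
draft_a = Node("draft_a", parents=[prompt])
draft_b = Node("draft_b", parents=[prompt])
loss = Node("loss", parents=[draft_a, draft_b])

backward(loss, toy_grad)
print(prompt.aggregated_grad())
```

Here `prompt` has two successors, so it ends up with two pieces of feedback that are joined before any update would be made.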

A significant aspect is the objective function, which, unlike traditional backpropagation, is often non-differentiable and described in natural language, evaluated through LLM prompts. For example, in coding:

`Loss(code, target goal) = LLM("Here is a code snippet: {code}. Here is the goal for this snippet: {target goal}. Evaluate the snippet for correctness and runtime complexity.")`
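A small sketch of such a loss, assuming the prompt wording quoted above: `make_code_loss` and `stub_llm` are hypothetical names, and the stand-in model returns a fixed evaluation. The point to notice is that the "loss value" is a piece of text, not a number.

```python
from typing import Callable

# Hypothetical type: any function mapping a prompt string to a completion string.
LLM = Callable[[str], str]


def make_code_loss(llm: LLM, target_goal: str) -> Callable[[str], str]:
    """Build a loss function for a fixed goal; calling it returns a textual evaluation."""

    def loss(code: str) -> str:
        prompt = (
            f"Here is a code snippet: {code}. "
            f"Here is the goal for this snippet: {target_goal}. "
            "Evaluate the snippet for correctness and runtime complexity."
        )
        return llm(prompt)

    return loss


def stub_llm(prompt: str) -> str:
    """Stand-in evaluator that returns a fixed critique (no API call)."""
    return "Correct for small inputs, but the nested loop makes it O(n^2)."


loss_fn = make_code_loss(stub_llm, target_goal="Return the indices of two numbers that sum to k")
evaluation = loss_fn("for i in range(n):\n    for j in range(n): ...")
print(evaluation)
```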

This is both universal and flexible, providing a fascinating approach to defining loss functions in natural language.

Case Studies and Results

- Coding Tasks: The task was to generate code solving LeetCode Hard problems. The setup was: `Code-Refinement Objective = LLM(Problem + Code + Test-time Instruction + Local Test Results)`, where Code was optimized through TextGrad, achieving a 36% completion rate.

- Solution Optimization: This involved enhancing solutions to complex questions in Google-proof Question Answering (GPQA), like quantum mechanics or organic chemistry problems. TextGrad performed three iterations with majority voting, resulting in a 55% success rate, surpassing previous best-known results.

- Prompt Optimization: For reasoning tasks from Big Bench Hard and GSM8k, the goal was to optimize prompts using feedback from a stronger model (gpt-4o) for a cheaper one (gpt-3.5-turbo-0125). Mini-batches of 3 were used across 12 iterations, with prompts updated upon validation improvement, outperforming Zero-shot Chain-of-Thought and DSPy.

- Molecule Optimization: Starting from a small fragment in SMILES notation, affinity scores from Autodock Vina and druglikeness via QED score from RDKit were optimized using TextGrad for 58 targets from the DOCKSTRING benchmark, producing notable improvements.

- Radiotherapy Plan Optimization: This involved optimizing hyperparameters for treatment plans, where the loss was defined as `L = LLM(P(θ), g)`, with g representing clinical goals, yielding meaningful results.
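The prompt-optimization setup from the list above (mini-batches of 3, 12 iterations, updates kept only when validation improves) can be sketched as a simple loop. Everything here is illustrative: `optimize_prompt`, `propose`, and `score` are hypothetical names, the toy `propose` and `score` stand in for the LLM-based gradient step and the validation run, and requiring a strict improvement is an assumption.

```python
import random
from typing import Callable, List, Sequence, Tuple

Example = Tuple[str, str]  # (question, reference answer)


def optimize_prompt(
    prompt: str,
    train: Sequence[Example],
    val: Sequence[Example],
    propose: Callable[[str, List[Example]], str],
    score: Callable[[str, Sequence[Example]], float],
    iterations: int = 12,
    batch_size: int = 3,
    seed: int = 0,
) -> Tuple[str, float]:
    """Keep a candidate prompt only if it scores higher on the validation set."""
    rng = random.Random(seed)
    best_prompt, best_score = prompt, score(prompt, val)
    for _ in range(iterations):
        batch = rng.sample(list(train), k=min(batch_size, len(train)))
        candidate = propose(best_prompt, batch)  # stands in for backward + TGD step
        candidate_score = score(candidate, val)
        if candidate_score > best_score:         # assumption: strict improvement
            best_prompt, best_score = candidate, candidate_score
    return best_prompt, best_score


# Toy stand-ins: each proposal appends "!", and the score saturates at 3.
def toy_propose(prompt: str, batch: List[Example]) -> str:
    return prompt + "!"


def toy_score(prompt: str, val: Sequence[Example]) -> float:
    return float(min(prompt.count("!"), 3))


train_set = [(f"q{i}", f"a{i}") for i in range(10)]
val_set = [(f"vq{i}", f"va{i}") for i in range(5)]
final_prompt, final_score = optimize_prompt("Answer.", train_set, val_set, toy_propose, toy_score)
print(final_prompt, final_score)
```

Gating on validation means a bad rewrite costs one iteration rather than derailing the run, which matters because textual updates have no step size to shrink.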

Conclusion

TextGrad offers an intriguing, general approach that applies across domains, from coding to medicine. The methodology has been packaged as a library with an API similar to PyTorch. Natural next steps include extending the framework to other modalities such as images or sound, and integrating more tools and retrieval-augmented generation (RAG).
