The Mistral AI team is pleased to announce the release of Mistral Large, our latest and most advanced language model. Mistral Large is available through La Plateforme and through Azure, which is our first distribution partner.

Introducing Mistral Large: The New Benchmark in AI

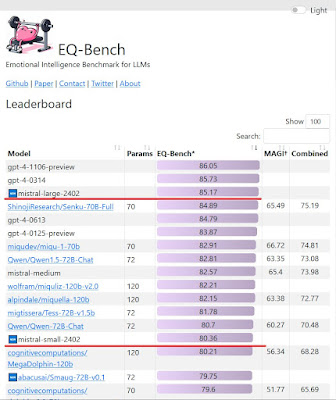

Mistral Large is our new flagship text generation model, built for complex multilingual reasoning tasks, including text understanding, transformation, and code generation. On widely used benchmarks, it ranks as the world's second-best model generally available through an API, behind only GPT-4.

A Closer Look at Mistral Large's Capabilities

Mistral Large comes with the following capabilities and strengths:

- Multilingual Mastery: Mistral Large is fluent in English, French, Spanish, German, and Italian, with a nuanced understanding of grammar and cultural context.

- Extended Context Window: Its 32K-token context window allows precise information recall from large documents.

- Advanced Instruction Following: Its ability to follow instructions precisely allows developers to tailor moderation policies effectively.

- Native Function Calling: This feature, along with a constrained output mode, facilitates large-scale application development and tech stack modernization.
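
To make the 32K-token context window listed above concrete, here is a minimal sketch of checking whether a document fits and splitting it when it does not. The four-characters-per-token ratio, the rounded 32,000 figure, and the names `estimate_tokens` and `split_to_fit` are all assumptions for illustration; they are not Mistral's tokenizer or API, and real token counts will differ.

```python
# Rough context-budget check for a 32K-token window.
# ASSUMPTION: 4 characters per token is a crude heuristic, not
# Mistral's tokenizer. Use the real tokenizer for exact counts.
CONTEXT_WINDOW_TOKENS = 32_000  # "32K" from the announcement, rounded
CHARS_PER_TOKEN = 4             # illustrative ratio only


def estimate_tokens(text: str) -> int:
    """Estimate the token count of `text` from its character length."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def split_to_fit(text: str, reserved_tokens: int = 2_000) -> list[str]:
    """Split `text` into pieces that each fit the window, keeping
    `reserved_tokens` free for the prompt and the model's answer."""
    budget_chars = (CONTEXT_WINDOW_TOKENS - reserved_tokens) * CHARS_PER_TOKEN
    if budget_chars <= 0:
        raise ValueError("reserved_tokens leaves no room for the document")
    return [text[i:i + budget_chars] for i in range(0, len(text), budget_chars)]
```

A document whose estimate is under the budget comes back as a single piece; a longer one is cut at fixed character offsets, which a real pipeline would likely replace with paragraph-aware splitting.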

Partnering with Microsoft: Bringing AI to Azure

In our mission to make frontier AI ubiquitous, we're excited to announce our partnership with Microsoft. This collaboration brings our open and commercial models to Azure. Mistral Large is now available on Azure AI Studio and Azure Machine Learning, with a user experience comparable to that of our own APIs.

Deployment Options for Every Need

- La Plateforme: Hosted on Mistral’s secure European infrastructure, offering a wide range of models for application and service development.

- Azure: Mistral Large is available through Azure AI Studio and Azure Machine Learning.

- Self-Deployment: For the most sensitive use cases, deploy our models in your environment with access to our model weights.
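
For the La Plateforme option above, a request to the hosted API might look like the following sketch. The endpoint URL, the model identifier `mistral-large-latest`, and the `MISTRAL_API_KEY` environment variable are assumptions that do not appear in this announcement, so check them against the current API documentation. An Azure deployment would use its own endpoint and key.

```python
import json
import urllib.request

# ASSUMPTIONS: neither value is stated in the announcement.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-large-latest"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Building the request separately from sending it keeps the payload easy to inspect and test. A caller would typically read the key with `os.environ["MISTRAL_API_KEY"]`, pass the result of `build_chat_request` to `send`, and read the reply text from the first entry of the response's `choices` list, assuming the usual chat completion response shape.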

Mistral Small: Optimized for Efficiency

Alongside Mistral Large, we are releasing Mistral Small, a model optimized for low latency and cost while retaining strong performance. It sits between our open-weight offerings and our flagship model, and it benefits from the same features as Mistral Large.

What’s New?

- Open-Weight and Optimized Model Endpoints: Offering competitive pricing and refined performance.

- Enhanced Organizational and Pricing Options: Including multi-currency pricing and updated service tiers on La Plateforme.

- Reduced Latency: Significant improvements across all endpoints for a smoother experience.

Beyond the Models: JSON and Function Calling

To facilitate more natural interactions with our models, we introduce JSON format mode and function calling. These features enable developers to structure output for easy integration into existing pipelines and to interface Mistral endpoints with a wider range of tools and services.
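
As a rough illustration of these two features, the sketch below builds one request payload that asks for JSON output and one that offers a tool to the model, then dispatches any tool calls found in a reply. The field names (`response_format`, `tools`, `tool_choice`, `tool_calls`) and the model identifier are assumptions based on the common chat completion request shape, not details given in this announcement; `get_order_status` is a hypothetical tool invented for the example.

```python
import json

MODEL = "mistral-large-latest"  # ASSUMPTION: model identifier


def json_mode_payload(prompt: str) -> dict:
    """Request body asking the model to answer with a JSON object."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "response_format": {"type": "json_object"},  # assumed field name
    }


def get_order_status(order_id: str) -> dict:
    """Hypothetical tool: a real one would query an order system."""
    return {"order_id": order_id, "status": "shipped"}


# Tool description in JSON Schema form, offered to the model.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]

REGISTRY = {"get_order_status": get_order_status}


def function_calling_payload(prompt: str) -> dict:
    """Request body that lets the model decide whether to call a tool."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "tools": TOOLS,
        "tool_choice": "auto",
    }


def run_tool_calls(message: dict) -> list[dict]:
    """Run every tool call in an assistant message.

    ASSUMPTION: each call carries a function name and a JSON string
    of arguments under call["function"].
    """
    results = []
    for call in message.get("tool_calls") or []:
        function = call["function"]
        handler = REGISTRY[function["name"]]
        arguments = json.loads(function["arguments"])
        results.append(handler(**arguments))
    return results
```

In a full exchange, each result from `run_tool_calls` would be sent back to the model in a follow-up message so it can compose the final answer. Keeping a registry of allowed functions means the model can only trigger code you have explicitly exposed.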

Join the AI Revolution

Mistral Large and Mistral Small are available now on La Plateforme and Azure. Experience the cutting-edge capabilities of our models and join us in shaping the future of AI. We look forward to your feedback and to continuing our journey towards making advanced AI more accessible to all.
