Beginning of the saga

What we know for now

- anon on /lmg/ posts a 70b model named miqu, saying it's good

- uses the same instruct format as mistral-instruct, 32k context

- extremely good on basic testing, similar answers to mistral-medium on perplexity's api

- miqu uses the llama2 tokenizer; from basic testing, mistral-medium seems to use it as well (anons are comparing prompt token counts)
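
For readers who want to try the model, here is a rough sketch of the mistral-instruct prompt layout mentioned in the list above. The function name `build_mistral_instruct_prompt` is made up for illustration, and the exact spacing around `[INST]` / `[/INST]` and the explicit `<s>` / `</s>` markers are assumptions based on the publicly documented Mistral instruct template. Many loaders add the BOS token themselves, so check your runtime before prepending it by hand.

```python
from typing import List, Optional, Tuple


def build_mistral_instruct_prompt(
    turns: List[Tuple[str, Optional[str]]],
    bos: str = "<s>",
    eos: str = "</s>",
) -> str:
    """Format a conversation in the mistral-instruct style.

    `turns` is a list of (user_message, assistant_reply) pairs; the last
    reply may be None to leave the prompt open for the model to answer.
    Spacing and special-token handling here are assumptions, not a
    guaranteed match for any particular inference backend.
    """
    parts = [bos]
    for user_msg, assistant_msg in turns:
        # Each user message is wrapped in [INST] ... [/INST]
        parts.append(f"[INST] {user_msg} [/INST]")
        if assistant_msg is not None:
            # A completed assistant reply is closed with the EOS marker
            parts.append(f" {assistant_msg}{eos}")
    return "".join(parts)


if __name__ == "__main__":
    print(build_mistral_instruct_prompt([("Write a haiku about llamas.", None)]))
```

For a multi-turn chat, pass earlier exchanges as completed pairs and leave the final reply as `None`.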

Mistral reaction

In a significant development, Arthur Mensch, co-founder and CEO of Mistral, acknowledged that an over-enthusiastic employee of one of their early access customers leaked a quantized and watermarked version of an older model. This revelation confirmed a connection between "miqu-1-70b" and Mistral's AI models, though not directly tying it to the current version of Mistral-Medium.

Implications in the AI Community

The "miqu-1-70b" episode reflects the dynamic nature of AI development and distribution, especially in the open-source community. It underscores the challenges in controlling the dissemination of powerful AI models and sparks discussions about responsible sharing and usage of such technologies.

Model info:

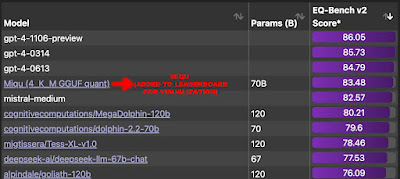

Benchmarks:

The model scores highly on MT-Bench, placing just behind Mistral-medium.

Models and HuggingFace

Conclusion

The "miqu-1-70b" model's emergence and the subsequent revelations have stirred excitement and debate within the AI community. It highlights the thin line between innovation and control in the rapidly evolving field of artificial intelligence. As the industry continues to grow, events like these provide valuable lessons and insights into the future of AI development and distribution.
